We can help you navigate the evolving scholarly publishing process, not only for your “final” manuscript but also for your other critical research and publishing outputs, such as preprints, data, interactive models, conference proceedings, posters, working papers, blog posts, and much more.


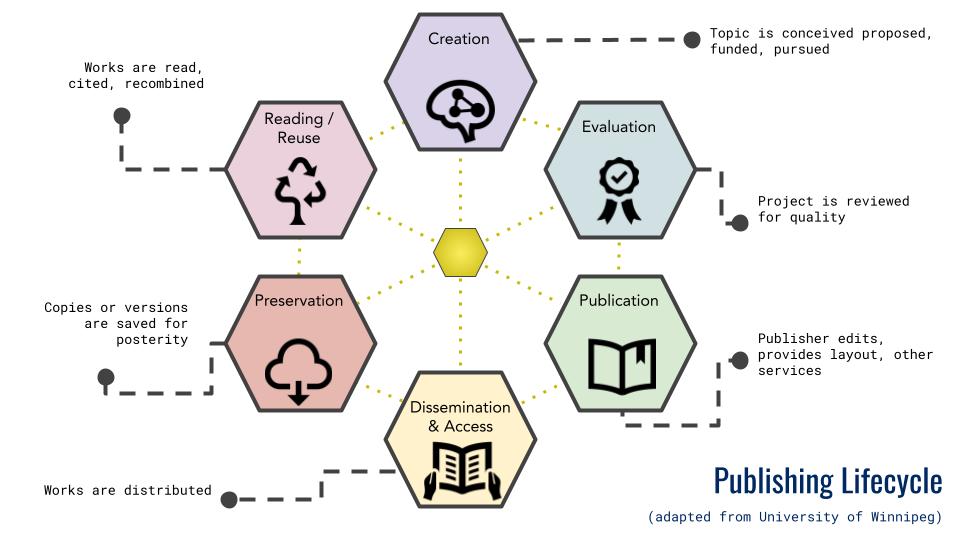

The lifecycle

Publishers

You want to build your academic reputation, but how do you know which journals or academic presses to submit your work to? You’ll want to consider the norms in your field, recommendations from peers or advisors, and how much you value open access.

We’ve put together some guidelines.

Evaluating journals

With journal publishing, you will often be making choices based on the “impact” of various journals — meaning how those journals are recognized and perceived in the scholarly community, the frequency of citation of articles from those journals, and the like. (We discuss various statistical measures of impact in the Research Impact and Scholarly Profiles section.) But you should also consider impact in terms of openness. That is: Who can access the scholarship being published by that journal? Is it open for reading by all, or confined to only those institutions able to pay?

Gauging journal subject matter fit and impact

If you’re unfamiliar with the journals in your field, there are comparison tools that can help with the evaluation process:

- Journal Citation Reports: JCR provides citation data for journals across nearly two hundred subject categories. You can browse by subject category or by known title. JCR enables you to identify journals with high impact factors, understand the ranking of journals within a subject category, and more.

- Eigenfactor.org: Offers valuable information about the Eigenfactor Score and the Article Influence Score for various journals. You can also explore the cost effectiveness of both subscription journals (ranked according to the value per dollar they provide) and open access journals (compared by the article-processing fees they charge).

- CiteScore: Identify and compare journal impact metrics across a wide range of journal titles and disciplines.

- UlrichsWeb: Provides key information about journals’ publishing frequency, location, audience, peer review status and more.

Evaluating open access journals

If you’re interested in open access publishing, but unfamiliar with a particular OA journal you’ve come across, you can also find out more about it by checking these additional sites:

- Is the journal included in the Directory of Open Access Journals (DOAJ)? DOAJ is a comprehensive, “community-curated online directory that indexes and provides access to high quality, open access, peer-reviewed journals.” To be included, journals must be peer-reviewed or employ editorial quality control. Inclusion also indicates that a journal does not use deceptive marketing to solicit papers in order to collect the article processing charges that authors may pay. (See our page on open access publishing models.)

- Is the publisher a member of the Open Access Scholarly Publishers Association (OASPA)?

- The Scholarly Communication Toolkit page on Evaluating Journals also has extensive information about how to select open access journals for publication.

Concerned about deception?

If you’ve checked the above sources, but still have questions about the legitimacy of a journal solicitation you’ve received, there are several ways you can screen for propriety.

- Are you getting confusing spam? If you’ve been receiving unsolicited e-mails from journals that are not indexed in the above reputable sources, this may be an indication of deceptive practices.

- Have you checked for deceptive characteristics? Researchers in 2017 identified various characteristics of deceptive journals. They went on to summarize these as: “low article-processing fees (less than US$150); spelling and grammar errors on the website; an overly broad scope; language that targets authors rather than readers; promises of rapid publication; and a lack of information about retraction policies, manuscript handling or digital preservation. Manuscript submissions by e-mail and the inclusion of distorted images are also common.”

- Have you done a “Think, Check, Submit”? Thinkchecksubmit.org, a campaign from many leading open access publishers, also helps researchers identify trusted journals for their research by offering a simple checklist to assess journal or publisher credentials. This is another great way to evaluate journal quality and spot unscrupulous activity. In addition to the checklist, the campaign offers a Think, Check, Submit video.
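If it helps to work through the 2017 list of deceptive-journal characteristics systematically, here is a minimal Python sketch that turns it into a checklist. The `JournalObservations` fields and the `red_flags` function are hypothetical names of our own; the sketch only tallies what you have observed and is not a validated detector. A journal that trips a flag is not necessarily deceptive, and one that trips none is not necessarily sound.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class JournalObservations:
    """What you noticed about a journal (hypothetical, illustrative fields)."""
    apc_usd: Optional[float] = None  # stated article-processing fee, if any
    website_has_spelling_errors: bool = False
    overly_broad_scope: bool = False
    targets_authors_not_readers: bool = False
    promises_rapid_publication: bool = False
    describes_retraction_policy: bool = True
    describes_manuscript_handling: bool = True
    describes_digital_preservation: bool = True
    accepts_submissions_by_email: bool = False
    has_distorted_images: bool = False


def red_flags(obs: JournalObservations) -> List[str]:
    """Return the characteristics from the 2017 list that this journal shows."""
    flags = []
    if obs.apc_usd is not None and obs.apc_usd < 150:
        flags.append("low article-processing fee (under US$150)")
    if obs.website_has_spelling_errors:
        flags.append("spelling and grammar errors on the website")
    if obs.overly_broad_scope:
        flags.append("overly broad scope")
    if obs.targets_authors_not_readers:
        flags.append("language that targets authors rather than readers")
    if obs.promises_rapid_publication:
        flags.append("promises of rapid publication")
    if not obs.describes_retraction_policy:
        flags.append("no information about retraction policies")
    if not obs.describes_manuscript_handling:
        flags.append("no information about manuscript handling")
    if not obs.describes_digital_preservation:
        flags.append("no information about digital preservation")
    if obs.accepts_submissions_by_email:
        flags.append("manuscript submission by e-mail")
    if obs.has_distorted_images:
        flags.append("distorted images")
    return flags


if __name__ == "__main__":
    # A hypothetical journal with a very low fee that promises fast turnaround.
    suspicious = JournalObservations(apc_usd=99, promises_rapid_publication=True)
    for flag in red_flags(suspicious):
        print("-", flag)
```

Treat any flags as prompts for a closer look (or a question to us), not as a verdict.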

Remember, we can help! If you’re not sure about a journal, email us at schol-comm@berkeley.edu. And you can always consult advisors or subject specialist librarians in your field to provide more tailored advice.

Evaluating academic presses

Choosing a book publisher can be daunting, especially if you are looking to be published for the first time. The most useful advice and guidance will likely come from peers, colleagues, and academic advisors familiar with publishing in your discipline. They’ll be most knowledgeable about the logistics, publishing terms, marketing efforts, and prestige of particular presses.

Another way to get started is to consult resources that reveal various presses’ goals, target audiences, and interests. Some of the best resources that do that are the publishers' catalogs — that is, inventories and descriptions of the books they’ve published.

- American Association of University Presses (AAUP) has a list of member university presses. By going to the websites of particular publishers, you can find these catalogs and see exactly what the press is publishing in your discipline.

- Not sure which publishers’ websites to look at? AAUP also has a Subject Area Grid that identifies the interest areas of member publishers.

Explore presses with open access programs

Increasingly, presses offer open access book publishing. Open access books have tremendous potential to increase your readership and impact, while also still fostering print sales for readers who prefer it. They also can facilitate advanced media innovation in the publishing process.

With open access books, as with some open access journals, there may be an author fee assessed as a cost recovery mechanism for the press — given that they may sell fewer print copies to libraries since the book will be made available openly online. At UC Berkeley, we have a program that subsidizes any such fees! Check out our Get Funding to Publish Open Access page for details.

Other networking

Finally, there’s some networking you could do. Anali Perry of Arizona State University, on the Select a Venue page of her Getting Published guide, offers some great advice for outreach that can lead to a more streamlined press selection process.

As she explains:

If you’re attending conferences, you can set up meetings with editors to review a book idea and discuss whether this might be of interest. Another option is to contact editors directly with book ideas, written as a long essay (in the style of the press’s book catalog) stating the problem, what are you proposing, and how it is yours. Do this before writing the entire book - it’s better to work with an editor while you’re writing the book, not after. You can also be in contact with more than one publisher until you decide to accept an offer — just be honest that you’re investigating multiple options.

You can also check out this video from the AAUP. In 2015, AAUP convened a virtual panel to “take the scary out of scholarly publishing.” Their experts discussed tips and strategies for working with scholarly presses throughout the publishing process.

Questions?

Contact us to set up a consultation!

Peer review

What is “peer review”?

At its core, peer review (also called “refereeing”) is the effort of scholars within a discipline or area of research to critique and evaluate scholarly contributions from others in that same domain, and to determine whether that scholarship should be disseminated or how it can be improved. More than 1.5 million peer-reviewed scholarly articles are published each year.

Journals differ in the percentage of submitted papers they accept. High impact factor journals such as Science or Nature may reject even good quality research papers if an editor deems them not ground-breaking enough. Other journals, such as PLoS One, instead aim to get more scholarship out and circulated: their review process focuses on scientific rigor rather than on novelty.

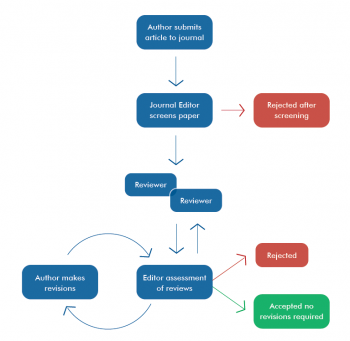

Basic models for peer review

As scholarly publishing changes, so too do peer review models. Typically, though, peer review involves authors (who conduct research and write the manuscript), reviewers (“peers” in the domain who provide expert opinions and advice), and editors (who make acceptance and publishing decisions). A basic model could look like the following, though there are multiple approaches.

In this model: A paper is submitted to a journal. A journal editor screens the manuscript to determine whether it should be passed through to the critique stage or rejected outright. The editor recruits reviewers, who then analyze and critique the work. The reviewers pass opinions and suggested edits back to the editor, who asks the author to revise accordingly. This process of revision could go through several iterations. After author revisions are complete, the editor decides whether to accept the paper for publication or reject it.
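One way to see the basic model as a sequence of steps is to write it as a small state machine. The Python sketch below is illustrative only: the stage names and the `TRANSITIONS` table are our own simplification of the description above, and real journals add many more states (for example, cascading a rejected paper to another journal).

```python
from enum import Enum, auto
from typing import Dict, List, Set


class Stage(Enum):
    SUBMITTED = auto()
    EDITOR_SCREENING = auto()
    UNDER_REVIEW = auto()      # reviewers analyze and critique the work
    AUTHOR_REVISION = auto()   # editor asks the author to revise
    FINAL_DECISION = auto()
    ACCEPTED = auto()
    REJECTED = auto()


# Which stage may follow which, in this simplified basic model.
TRANSITIONS: Dict[Stage, Set[Stage]] = {
    Stage.SUBMITTED: {Stage.EDITOR_SCREENING},
    # The editor either passes the paper on to critique or rejects it outright.
    Stage.EDITOR_SCREENING: {Stage.UNDER_REVIEW, Stage.REJECTED},
    Stage.UNDER_REVIEW: {Stage.AUTHOR_REVISION},
    # Revision may go through several rounds before the final decision.
    Stage.AUTHOR_REVISION: {Stage.UNDER_REVIEW, Stage.FINAL_DECISION},
    Stage.FINAL_DECISION: {Stage.ACCEPTED, Stage.REJECTED},
    Stage.ACCEPTED: set(),
    Stage.REJECTED: set(),
}


def is_valid_history(history: List[Stage]) -> bool:
    """Check that a manuscript's history starts at submission and only makes allowed moves."""
    if not history or history[0] is not Stage.SUBMITTED:
        return False
    return all(nxt in TRANSITIONS[cur] for cur, nxt in zip(history, history[1:]))


if __name__ == "__main__":
    two_rounds = [
        Stage.SUBMITTED, Stage.EDITOR_SCREENING,
        Stage.UNDER_REVIEW, Stage.AUTHOR_REVISION,   # first round
        Stage.UNDER_REVIEW, Stage.AUTHOR_REVISION,   # second round
        Stage.FINAL_DECISION, Stage.ACCEPTED,
    ]
    print(is_valid_history(two_rounds))                          # True
    print(is_valid_history([Stage.SUBMITTED, Stage.ACCEPTED]))   # False
```

Writing the process this way makes the loop between review and revision explicit, which is where most of the time to publication tends to go.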

Note, too, that some publishers have implemented a “cascading” approach so as not to squander reviewers’ efforts if a paper is ultimately rejected by an editor at the final stage. As Dan Morgan, Digital Science Publisher at the University of California Press, explains (at p. 10 of the Standing up for Science 3 guide to peer review):

Cascading peer review (a.k.a. ‘waterfall peer review’) is when a paper that has been rejected after peer review is passed on to another journal along with the reviewers’ reports. The peer review process at the second journal can be kept relatively short because the editor considers the reports from an earlier round of peer review, along with any new reviews. Variations on this process exist, according to the type of journal — but essentially reviews can ‘cascade’ down through various journals.

Cascading peer review can accelerate the time to publication so that valuable review efforts are not lost. Moreover, many publishing groups that issue multiple journals will automatically apply this process — helping to find the right journal for your particular manuscript.

Transparency

Within this basic peer review model, journals can employ different approaches to how and whether authors get to know their reviewers, and vice versa. The idea behind masking or revealing this information is that such knowledge may introduce bias, or affect how honest and critical the reviews are. These various approaches include, for example:

- Single-blind review: Reviewers know who authors are, but authors do not know who reviewers are.

- Double-blind review: Neither reviewers nor authors are informed about who the others are.

- Open review: Reviewers and authors know each other's identities, and reviewer commentary may also be published alongside the final article.

- Post-publication open review: Here, readers and reviewers can submit public comments on published articles. Often, these comments are mediated by the editor.

If working papers are uploaded to a repository (such as arXiv for mathematics, physics, and other non-life sciences), there is also an opportunity for pre-publication peer review via the comments submitted by readers and downloaders at those sites.

Learn more

You can learn a lot more about the mechanics of peer review, and tips for how to conduct peer review, in the following guide:

- Peer Review the Nuts-and-Bolts: A Guide for Early Career Researchers (Standing up for Science 3, 2017)

And you can contact us with questions at schol-comm@berkeley.edu!

Research impact

Why are we talking about impact?

Among other things, awareness of your scholarly impact can help you:

- Strengthen your case when applying for promotion or tenure.

- Quantify return on research investment for grant renewals and progress reports.

- Strengthen future funding requests by showing the value of your research.

- Understand your audience and learn how to appeal to them.

- Identify who is using your work and confirm that it is appropriately credited.

- Identify collaborators within or outside of your subject area.

- Manage your scholarly reputation.

Measuring your impact

Measuring impact is not a perfect science, and there are many who argue against its implications altogether. Here, we just want to present information about the statistical measures that exist so that you can make informed decisions about how and whether to gauge your impact.

Often, measuring impact relies on metrics such as article-level metrics, author-level metrics, journal or publisher metrics, and altmetrics.

Article-level metrics

Article-level metrics quantify the reach and impact of published research. For this, we can look to various measures such as citation counts, field-weighted citation impact, or social networking readership statistics.

- Citation count: How many times has your article been cited? This can be difficult to assess and assign meaning to. How recent your article is obviously affects how many times it’s been cited. Additionally, the database or source of the statistic greatly affects the count, because the database needs to be able to scan a large number of possible places where your article could be cited, and not all databases have access to the same information in that regard.

- Field-weighted citation impact: Since it takes time for publications to accumulate citations, it is normal that the total number of citations for recent articles is lower. Moreover, citations in one field may accumulate faster than in others simply because that field produces more publications. Therefore, instead of comparing absolute counts of citations, you might want to consider another citation measure called field-weighted citation impact (FWCI) that adjusts for these differences. FWCI divides the number of citations received by a publication by the average number of citations received by publications in the same field, of the same type, and published in the same year. The world average is indexed to a value of 1.00. Values above 1.00 indicate above-average citation impact, and values below 1.00 indicate below-average citation impact. It’s a proprietary statistic, though, so you need access to Elsevier’s Scopus, which UC Berkeley provides.

- Social networking site readership: Another article-level metric is something like Mendeley Readership, which indicates the number of Mendeley users who have added a particular article to their personal library. This number can be considered an early indicator of the impact a work has, and typically Mendeley readership counts correlate moderately with future citations.

Author-level metrics

Author-level metrics address an author’s productivity and diversity of reach. We can look to measures of overall scholarly output, journal count, journal category count, and H-index or H-graph.

- Journal count: Journal count indicates the diversity of an author’s publication portfolio: in how many distinct journals have this author’s publications appeared? This can help show the excellence of authors who work across traditional disciplines and have a broad array of journals in which to submit.

- Journal category count: Journal category count indicates how many journal categories an author has published in. This can be useful for tracking the breadth and reach of scholarship, and its interdisciplinarity.

- H-index: The h-index is an author-level metric that attempts to measure both the productivity and the citation impact of a scientist's or scholar's publications. A scholar's h-index is the largest number h such that the scholar has published h papers that have each been cited at least h times. Jorge Hirsch, who proposed the index, suggested that after 20 years of research an h-index of 20 is good, 40 is outstanding, and 60 is truly exceptional; typical values vary widely by field.

- Scholarly output: Scholarly output demonstrates an author’s productivity: How many publications does this author have? This is a good metric for comparing authors who are similar, and at similar stages of career.

Journal or publisher metrics

Journal or publisher metrics address weights or prestige that particular publications are seen to carry. Some measures include:

- SCImago Journal Rank (SJR): The SJR indicator, published through the SCImago Journal & Country Rank portal, can be considered the “average prestige per article,” and is based on the idea that not all citations are equal. (In other words, a journal's articles could be cited in publications of varying prestige.) Here, the subject field, quality, and reputation of the citing journals have a direct effect on the “value” of a citation.

- Impact per publication (IPP): IPP gives you a sense of the average number of citations that a publication in the journal will likely receive. It measures the ratio of citations per article published in a journal. Unlike the standard impact factor, the IPP metric uses a three-year citation window, widely considered to be the optimal time period to accurately measure citations in most subject fields.

- Source-normalized impact per paper (SNIP): When normalized for the citations in the subject field, the raw Impact per Publication (IPP) becomes the Source Normalized Impact per Paper (SNIP). SNIP measures contextual citation impact by weighting citations based on the total number of citations in a subject field. The impact of a single citation is given higher value in subject areas where citations are less likely, and vice versa.

Altmetrics

Altmetrics account for “non-traditional” citations of your scholarly work. They address the fact that scholarly conversations have expanded beyond the peer-reviewed article. People are now Tweeting and blogging about your articles, for instance, and altmetrics accumulate these mentions. To find out how your work is being cited and used in these ways, learn more at Altmetric.com.

Monitoring your impact

There are numerous existing and emerging tools available to help you track your scholarly impact by enabling you to create a virtual scholarly profile in which you input and keep track of all your professional activities and publications.

When selecting one of these tools, it’s helpful to consider:

- What sources of information is your chosen tool “pulling from” or indexing? The more sources the tool can “read,” the more comprehensive your metrics will be.

- What is the business model of your tool? Is it for-profit and available with premium features for a fee, or is it a free platform available to all? For instance, Symplectic’s Elements and Elsevier’s Pure are licensed platforms that often come at substantial cost to an institution, whereas Impact Story, ORCID, and Google Scholar offer free profile services.

- Have you made a copy of your scholarly materials available also through your institutional repository? Many of the profiling tools are not geared toward actually preserving a copy of your work. So, to ensure that a copy of your work remains publicly available, it’s best to make sure you also deposit a copy in your institutional repository (in the case of UC, this is eScholarship.org).

With all that in mind, here are a few profiling tools from which you can choose:

- Impactstory. From their site: Impactstory is an open-source website that helps researchers explore and share the online impact of their research. By helping researchers tell data-driven stories about their work, we're helping to build a new scholarly reward system that values and encourages web-native scholarship. We’re funded by the National Science Foundation and the Alfred P. Sloan Foundation and incorporated as a 501(c)(3) nonprofit corporation.

- ORCID. From their site: ORCID provides an identifier for individuals to use with their name as they engage in research, scholarship, and innovation activities. We provide open tools that enable transparent and trustworthy connections between researchers, their contributions, and affiliations. We provide this service to help people find information and to simplify reporting and analysis.

- Google Scholar Citations. From their site: Google Scholar Citations provide a simple way for authors to keep track of citations to their articles. You can check who is citing your publications, graph citations over time, and compute several citation metrics. You can also make your profile public, so that it may appear in Google Scholar results when people search for your name... Best of all, it's quick to set up and simple to maintain - even if you have written hundreds of articles, and even if your name is shared by several different scholars. You can add groups of related articles, not just one article at a time; and your citation metrics are computed and updated automatically as Google Scholar finds new citations to your work on the web. You can choose to have your list of articles updated automatically or review the updates yourself, or to manually update your articles at any time.

- ResearchGate. From their site: Share your publications, access millions more, and publish your data. Connect and collaborate with colleagues, peers, co-authors, and specialists in your field. Get stats and find out who's been reading and citing your work.

- Academia.edu. From their site: Academia.edu is a platform for academics to share research papers. The company's mission is to accelerate the world's research. Academics use Academia.edu to share their research, monitor deep analytics around the impact of their research, and track the research of academics they follow.

- LinkedIn. From their site: LinkedIn operates the world’s largest professional network on the internet with more than 500 million members in over 200 countries and territories.

- Fee-based or proprietary profiling systems like Elements or Pure. These are software systems to help collect, understand, and showcase scholarly activities. They are not currently available at UC Berkeley.

Increasing your impact

In general, we recommend three overarching strategies to increase your scholarly impact:

A. Get your work seen and cited.

B. Promote your work and be social.

C. Develop and execute a personal plan.

We discuss each of these strategies with specifics below.

A. Get your work seen and cited

Publish pre-prints or post-prints in open access repositories.

Institutional or discipline-specific open access repositories (e.g. eScholarship.org for UC publications, bioRxiv, Humanities Commons, etc.) enable you to self-archive a copy of your work so that readers around the world can access it for free. Moreover, these repositories are indexed by Google so that your scholarship can easily be found. This is a terrific way to build readership and impact, while also contributing to progress and knowledge by making a version of your work available to all. To choose a repository that’s right for you, you can check OpenDOAR (the Directory of Open Access Repositories).

As a UC faculty member, staff, or student, you are automatically authorized under the UC open access policies to post a pre-print copy of your scholarly articles (defined broadly) to the UC repository, eScholarship. You can also check the web tool Sherpa/ROMEO to determine whether there are other versions of your scholarship that your publisher has authorized for deposit.

Publish open access.

Open access is the free, immediate, online availability of scholarship. This means that when people publish a scholarly article in an open access journal, it is put online for anyone to access — without readers (or readers’ institutions) having to pay any fees or subscription charges for it (also known as “paywalls”).

Paywalls limit readership. The great value of publishing open access is that it removes barriers between readers and scholarly publications, making it easier for everyone to find, use, cite, and build upon knowledge and ideas. In this way, open access connects your scholarship to the world and helps build your impact. Publishing open access is often a condition of research funding, so you should check your grants.

Open access publishers may ask for a fee to publish your scholarship open online in lieu of the fees they would ordinarily have collected from institutional memberships to the journal or publication. The UC Berkeley Library has a fund to cover these costs. You can learn more in our BRII (Berkeley Research Impact Initiative) Guide about applying for this funding.

There’s an open access place for all research outputs.

Your “final” publication — traditionally, an article, chapter, or scholarly monograph — is not the only thing readers desire to access and cite. You can publish your research data, code, software, presentations, working papers, and other supporting documents and documentation open access as well. In fact, in some cases, your funders might require it. Sharing these other research instruments not only advances knowledge and science, but also can help increase your impact and citation rates.

You can find the right open place for all your outputs. For instance, it’s possible to:

- Publish code on GitHub.

- Publish data sets on FigShare or Dryad.

- Publish presentations on SlideShare.

Publish several pieces on the same topic.

If you’ve written a journal article, you can spread the word about it by supplementing it with a blog post or magazine article — thereby attracting greater attention from readers interested in your topic. What’s more, publishing your article open access to begin with also helps your work get discovered by journalists, making it easier for them to write their own supplemental magazine articles about your research, too.

Write for your audience and publish in sources they read.

Of course, many of us would like to be able to publish in high impact journals or ones targeted to our audience. To find the best fit journals, it can be helpful to review each journal’s scope and submission criteria, and compare them with whom you believe your intended audience to be.

Use persistent identifiers to disambiguate you and your work from other authors.

There are more than 7 billion people in the world. If someone searches for your articles by your name, how can you be sure that they find yours and not someone else’s? How can you be sure that citations really reflect citations of your work and not someone else’s? Persistent identifiers — both for you and your publications — help disambiguate the chaos.

- ORCID: Much in the same way that a social security number uniquely identifies you, an ORCID “provides a persistent digital identifier that distinguishes you from every other researcher and, through integration in key research workflows such as manuscript and grant submission, supports automated linkages between you and your professional activities ensuring that your work is recognized.” Increasingly, publishers and funders ask for your ORCID upon article submission or application so that they can disambiguate you from other researchers, too. ORCIDs are free to create and doing so takes just moments. They also enable you to set up a personal web profile page where you can link all of your scholarship to your unique identifier — creating a profile that is uniquely yours.

- Digital Object Identifiers (DOIs): A DOI is a type of persistent identifier used to uniquely identify digital objects like scholarly articles, chapters, or data sets. Metadata about the digital object is stored in association with the DOI, which often includes a URL where the object can be found. The value of the DOI is that the identifier remains fixed over the lifetime of the digital object even if you later change the particular URL where your article is hosted. Thus, referring to an online document by its DOI provides more stable linking than simply using its URL. Publishers and repositories often assign DOIs to each of your publications for this reason. If you are a UC Berkeley researcher depositing in eScholarship, you can obtain a DOI through a service called EZID.
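Both kinds of persistent identifier are easy to check before you paste them into a CV, profile, or data citation. The Python sketch below assumes two facts: ORCID documents the last character of an iD as an ISO 7064 11,2 check character (which catches most typos), and modern DOIs begin with `10.`, a registrant code, and a slash. The DOI pattern is a simplified version of the one Crossref suggests, so older or unusual DOIs may not match; the function names are hypothetical, and neither function checks that the identifier is actually registered.

```python
import re
from urllib.parse import quote

# ORCID iD: four hyphen-separated groups of four; the final character may be "X".
_ORCID_PATTERN = re.compile(r"\d{4}-\d{4}-\d{4}-\d{3}[\dX]")

# Simplified pattern for modern DOIs: "10." + 4-9 digit registrant code + "/" + suffix.
_DOI_PATTERN = re.compile(r"10\.\d{4,9}/\S+")
_DOI_PREFIXES = ("https://doi.org/", "http://doi.org/", "https://dx.doi.org/", "http://dx.doi.org/", "doi:")


def orcid_check_character(first_15_digits: str) -> str:
    """ISO 7064 11,2 check character, as used for ORCID iDs."""
    total = 0
    for ch in first_15_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """True if the hyphenated iD is well formed and its check character matches."""
    if not _ORCID_PATTERN.fullmatch(orcid):
        return False
    chars = orcid.replace("-", "")
    return orcid_check_character(chars[:15]) == chars[15]


def normalize_doi(text: str) -> str:
    """Strip a 'doi:' prefix or resolver URL and return the bare DOI."""
    s = text.strip()
    for prefix in _DOI_PREFIXES:
        if s.lower().startswith(prefix):
            s = s[len(prefix):].strip()
            break
    if not _DOI_PATTERN.fullmatch(s):
        raise ValueError(f"not a recognizable DOI: {text!r}")
    return s


def doi_link(text: str) -> str:
    """Stable https://doi.org/ link, percent-encoding any characters unsafe in URLs."""
    return "https://doi.org/" + quote(normalize_doi(text), safe="/")


if __name__ == "__main__":
    print(is_valid_orcid("0000-0002-1825-0097"))  # True (ORCID's sample iD)
    print(is_valid_orcid("0000-0002-1825-0098"))  # False (last digit mistyped)
    print(doi_link("doi:10.1000/182"))            # https://doi.org/10.1000/182
```

`10.1000/182` is the DOI of the DOI Handbook; linking through `https://doi.org/` rather than a publisher URL is what keeps the link stable if the article moves.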

B. Promote your work and be social

Although it might seem too self-laudatory for some people’s tastes, speaking up about issues of interest to you and your audience can help position you as a thought leader in your space. Therefore, it can be helpful to participate and collaborate in promoting and discussing your work through social networking, blogging, listservs, personal networks, and more.

And don’t overlook your research that’s still underway! Discussing what’s in progress can help build interest.

C. Develop and execute a personal plan.

Perhaps the best way to increase your impact is to develop a plan that is tailored for your own needs, and check in with yourself periodically about whether it’s working. Your plan should focus on tactics that make your work visible, accessible, and reusable.

What might such a plan look like? Here is a sample that you can adapt.

- Create and maintain an online profile (Google Scholar, etc.).

- Use persistent identifiers (e.g. ORCIDs, DOIs) to disambiguate/link.

- Publish in fully OA journals or choose OA options.

- Creative Commons license your work for re-use.

- Post pre- or post-prints to repositories (eScholarship, PubMed Central, etc.).

- Make social media engagement a habit.

- Engage your audience in meaningful conversations.

- Connect with other researchers.

- Appeal to various audiences via multiple publications.

- Check back in on your goals.

Questions?

Do you want to talk more about tailoring strategies so that they are right for you? Please contact us at schol-comm@berkeley.edu!

Research data

You’ve invested significant time and resources into preparing your final publication. So, after peer review, you’re done, right? Not necessarily. You may desire (or be required) to also publish the data underlying your research.

Why should we care about publishing data?

Sharing research data promotes transparency, reproducibility, and progress. In some fields, it can spur new discoveries on a daily basis. It’s not atypical for geneticists, for example, to sequence by day and post research results the same evening — allowing others to begin using their datasets in nearly real time (see, for example, Pisani and AbouZahr’s paper). The datasets researchers share can inform business or regulatory policymaking, legislation, government or social services, and more.

Publishing your research data can also increase the impact of your research, and with it, your scholarly profile. Depositing datasets in a repository makes them both visible and citable. You can include them in your CV and grant application biosketches. In turn, scholars around the world can begin working with your data and crediting you. As a result, sharing detailed research data can be associated with increased citation rates (check out this Piwowar et al. study, among others).

Publishing your data may also be required. Federal funders (e.g. National Institutes of Health), granting agencies (e.g. Bill and Melinda Gates Foundation), and journal publishers (e.g. PLoS) increasingly require that datasets be made publicly available, often immediately upon publication of the associated article.

How do we publish data?

Merely uploading your dataset to a personal or departmental website won’t achieve these aims of promoting knowledge and progress. Datasets should link seamlessly to the research articles they support. Their metadata should be compatible with bibliographic management and citation systems (e.g. Crossref or RefWorks) and formatted for crawling by abstracting and indexing services. After all, you want to be able to find other people’s datasets, manage them in your own reference manager, and cite them as appropriate, so you’ll want your own dataset positioned for the same discoverability and ease of use.

How can you achieve all this? It sounds daunting, but it’s actually fairly straightforward. Select a data publishing tool or repository that is built around both preservation and discoverability. It should:

- Give your data a stable location or a DOI (Digital Object Identifier), which provides a persistent link to it.

- Help you create sufficient metadata to facilitate transparency and reproducibility.

- Optimize the metadata for search engines.

You can learn about a variety of specific tools on the Data Preservation and Archiving page of the Research Data Management program website. Briefly, here are some good options:

Sample tools

- Dryad: Dryad is an open-source research data curation and publication platform. UC Berkeley Library is a proud partner of Dryad and offers it as a free service for all UC Berkeley researchers to publish and archive their data. Datasets published in Dryad receive a citation and can be versioned at any time. Dryad is integrated with hundreds of journals and offers an easy way to both publish data and comply with funder and publisher mandates. Browse published datasets or submit your own at https://datadryad.org/stash.

- Figshare: Figshare is a multidisciplinary repository where users can make all of their research outputs available in a citable, shareable, and discoverable manner. Users can upload files in any format, which Figshare can display in the browser, so that figures, datasets, media, papers, posters, presentations, and filesets can all be disseminated. Figshare uses DataCite DOIs for persistent data citation. Users can upload individual files of up to 5 GB and receive 20 GB of free private storage. Figshare is hosted on Amazon Web Services, with backups performed daily and retained for five days.

- re3data: re3data.org is a global registry of research data repositories spanning many academic disciplines. It helps researchers, funding bodies, publishers, and scholarly institutions find repositories for the permanent storage of, and access to, datasets. re3data.org promotes a culture of sharing, increased access, and better visibility of research data. The registry went live in autumn 2012 and is funded by the German Research Foundation (DFG).

To explore others, check out OpenDOAR, the Directory of Open Access Repositories.

We also recommend that, if your chosen publishing tool allows it, you include your ORCID iD (a persistent digital identifier) with your datasets, just as with all your other research. This way, your research and scholarly output will be collocated in one place, making it easier for others to discover and credit your work.

What does it mean to license your data for reuse?

Uploading a dataset — with good metadata, of course! — to a repository is not the end of the road for shepherding one’s research. We must also consider what we are permitting other researchers to do with our data. And, what rights do we, ourselves, have to grant such permissions — particularly if we got the data from someone else, or the datasets were licensed to us for a particular use?

To better understand these issues, we first have to distinguish between attribution and licensing.

Citing datasets, or providing attribution to the creator, is an essential scholarly practice.

The issue of someone properly citing your data is separate, however, from the question of whether it’s permissible for them to reproduce and publish the data in the first place. That is, what license for reuse have you applied to the dataset?

The type of reuse we can grant depends on whether we own our research data and hold copyright in it. Several possibilities exist:

- Sometimes the terms of contracts we’ve entered into (e.g. funder/grant agreements, website terms of use) dictate data ownership and copyright. We must bear these terms in mind when determining what rights to grant others for using our data.

- Often, our employers own our research data under our employment contracts or university policies (e.g. the research data is “work-for-hire”).

Remember, the dataset might not be copyrightable to begin with if it does not constitute original expression. We could complicate things if we try to grant licenses to data for which we don’t actually hold copyrights. For an excellent summary addressing these “Who owns your data?” questions, including copyright issues, check out this blog post by Katie Fortney written for the UC system-wide Office of Scholarly Communication.

What’s the right license or designation for your data?

To streamline ownership and copyright questions and promote data reuse, data repositories often apply a single Creative Commons license or public domain designation to all deposited datasets. For instance:

- Dryad and BioMed Central repositories apply a Creative Commons Zero (CC0) designation to deposited data, meaning that by depositing in those repositories, you are not reserving any copyright you might have. Someone using your dataset should still cite it to comply with scholarly norms, but you cannot mandate that they attribute you, and you cannot pursue copyright claims against them.

It’s worth considering what your goals are for sharing the data in the first place, and selecting a designation or license that both meets your needs and fits within whatever ownership and use rights you hold over the data. We can help you with this. Ambiguity about whether data can be reused slows the pace of research, so state clearly for potential users which rights you are granting in the dataset you publish.

Questions?

Please contact us at schol-comm@berkeley.edu.

Economic overview

Basics of scholarly publishing

The scholarly communication landscape is shaped by several shifting economic forces, such as changes in:

- Publishing platforms and markets (e.g. emergence of open access business models, consortial funding for subscriptions, funder publishing platforms)

- Ways research is conducted (e.g. social research networks fostering global collaboration)

- Public policies (e.g. open access mandates, copyleft licensing models)

In the traditional publishing model, scholars produce and edit research and manuscripts, which publishers then evaluate, assemble, publish, and distribute. Libraries at the institutions that employ those scholars then pay for subscriptions to license or purchase the very content their researchers created. Typically these are large subscription packages with academic publishers that encompass dozens, if not hundreds, of journal titles.

The costs of scholarly journal subscriptions have risen unsustainably over many decades, outstripping inflation even relative to higher education markets. As costs have risen, so has the portion of the global research community operating without full access to the scholarly record, a group that now includes nearly all U.S. universities. The open access (OA) movement, discussed elsewhere on these pages (see Open Access Publishing), is in part a response to this affordability crisis.

Open access overview

In an OA world, libraries would not be paying for these out-of-reach subscriptions. But if academic publishers are still distributing scholarly content through traditional journal systems, they would naturally want some other form of cost recovery once subscriptions are off the table. OA publishing models differ in how, and whether, they address this issue.

As we discuss in the Open Access Publishing section, two of the predominant open access publishing models are “Gold Open Access” and “Green Open Access.”

Gold open access

Gold OA provides immediate access on the publisher’s website. Some Gold OA publishers recoup production costs by charging authors to publish (“article processing charges” or “book processing charges”) rather than having readers (or libraries) pay to access and read the work. In this system, the “author pays” rather than the “reader pays.” The author’s charges can be covered from many sources, such as research accounts, research grants, the university, the library, scholarly societies, and consortia. Publishers can also offset production costs by selling memberships, add-ons, and enhanced services.

Green open access

In the Green OA model, also known as self-archiving, authors continue to publish as they always have, in all the same journals. Once the article has been published in a traditional journal, however, the author also posts the “final author version” of the article to an institutional or subject repository, where it is open for anyone to read. Publishers often do not allow the formatted published version to be deposited, permitting only the unformatted “post-print” (refereed) or “pre-print” (author-submitted) version.

The (real) non-economic value of OA

While open access publishing has the potential to reduce costs, this is not the only (or even the main) driving force behind open access advocacy. The benefits to individual scholars, related institutions, scholarly communication, and the general researching public are also primary motivating factors.

Open access literature is free, digital, and available to anyone online. Providing greater access to scholarship can help attract more readers and build impact.

Moreover, in most cases open access literature is also free of downstream copyright restrictions beyond the requirement to attribute the original author. Such OA literature can be reused, remixed, and built upon to further spur innovation and progress.

New open access publishing models are continuing to emerge and be evaluated for sustainability. We have much more to say about them and all things open access on our Open Access page.